The Complete Operating System Course: Understanding Core Concepts and Beyond

Welcome to our adventure through the world of operating systems! Think of an operating system (OS) as the unsung hero of our digital lives: it’s the mastermind behind every computer, seamlessly bridging the gap between the tangible hardware we touch and the intangible software we interact with. This is not just a lecture series; it’s an exploration of the core of computing that reveals how operating systems work, how they manage resources, and how they provide the hidden layer on which software applications run.


From the moment you press the power button on your computer or swipe the screen of your smartphone, you’re engaging with an operating system. These sophisticated software systems provide the foundation of every computer setup, controlling everything from opening a text file to running large distributed systems across many servers. They ensure that your computer’s CPU, memory, and storage are used efficiently, that your data is protected from unauthorized access, and that the system remains stable rather than crashing.

We will cover everything from the basic ideas of operating systems to the specifics of memory allocation, file systems, process control, and more. Through a combination of theory and hands-on practice, you will study not just the “what” but also the “how” and “why” of operating systems. This course is suitable for everyone, whether you are a computer science student, a professional looking to broaden your technical expertise, or simply an inquisitive mind curious about how digital gadgets work.

By the end of this course, I hope you come away with a sense of accomplishment and excitement. Operating systems are the silent workhorses that support our digital world, and this course offers a behind-the-scenes look at how they manage resources, carry out tasks, and store data while prioritizing efficiency, dependability, and security.

Course topics that will be elaborated further

Introduction to Operating Systems

Understanding the Basics

Evolution of Operating Systems

Types of Operating Systems

Single-User Operating Systems

Multi-User Operating Systems

Real-Time Operating Systems

Process Management in Operating Systems

Process Creation and Termination

CPU Scheduling Algorithms

First-Come, First-Served (FCFS) Scheduling

Shortest Job First (SJF) Scheduling

Shortest Remaining Time First (SRTF) Scheduling

Longest Job First (LJF) Scheduling

Longest Remaining Time First (LRTF) Scheduling

Highest Response Ratio Next (HRRN) Scheduling

Round Robin Scheduling

Priority Scheduling

Various States of Processes

Process Control Block (PCB)

Schedulers and Types of Schedulers

Process Synchronization

Race Condition and Critical Section

Criteria for Synchronization Mechanisms

Lock Variable and Test and Set Lock (TSL)

Turn and Interest Variables

Semaphores

Counting Semaphores

Binary Semaphores

Practice Problems on Synchronization Mechanisms

Deadlock

Deadlock and Conditions

Deadlock Handling Strategies

Banker’s Algorithm and Deadlock Avoidance

Resource Allocation Graph and Deadlock Detection

Practice Problems on Deadlock

Memory Management in Operating Systems

Contiguous Memory Allocation

Non-Contiguous Memory Allocation and Paging

Page Table and Page Table Entry

Paging Important Formulas and Practice Problems

Optimal Page Size, Formula, and Practice Problems

Translation Lookaside Buffer (TLB) and Multilevel Paging

Practice Problems on Paging and Multilevel Paging

Page Fault and Page Replacement Algorithms

Practice Problems on Page Fault and Page Replacement

Belady’s Anomaly and Important Results

Segmentation and Segmented Paging

Practice Problems on Segmentation and Segmented Paging

Disk Scheduling and Management

Disk Structures and Components

Disk Scheduling Algorithms

First-Come, First-Served (FCFS)

Shortest Seek Time First (SSTF)

SCAN

C-SCAN

LOOK

C-LOOK

File System Organization and Management

File System Basics

File System Structure

Boot Control Block (BCB)

File Allocation Table (FAT)

Inode Table

File Access Methods

Sequential Access

Direct Access

Indexed Access

Conclusion

Recap of Key Concepts

Future Trends in Operating Systems

1. An Introduction to Operating Systems

Every computer, from powerful servers to smartphones, has an operating system (OS): a complex piece of software that controls hardware resources and provides services to application programs.

Operating systems are critical to the operation of all applications, from the most basic text editor to the most complex networked software systems. They manage the computer’s hardware resources, including the CPU, memory, and storage, and ensure that these resources are distributed fairly and efficiently among the many applications running on the system. Operating systems also ensure the security and stability of our computing environments by protecting the system’s data and resources from unauthorized access and by controlling program execution to contain errors and prevent crashes.

2. Types of Operating Systems

In the broad world of computing, operating systems (OS) are classified according to their design, functionality, and the kinds of computing environments they are meant to manage. Understanding this classification matters for students and professionals alike, because it highlights how flexibly operating systems are adapted to different needs. This course groups operating systems into three broad types, each with its own functions and applications: single-user operating systems, multi-user operating systems, and real-time operating systems.

A) Single-User Operating Systems

Single-user operating systems are designed to manage computer resources for one user at a time. They prioritize the user experience by allocating system resources, such as CPU time, memory, and storage, so that the user’s programs run efficiently and smoothly. Desktop operating systems such as Windows and macOS are common examples: they are typically used in personal computing, where one person interacts with the machine at a time, although both also support multiple user accounts. These systems are designed for ease of use, dependability, and performance in tasks ranging from document editing to multimedia processing.

B) Multi-User Operating Systems

Multi-user operating systems allow many users to share a computer’s resources, often over a network. They are designed to manage resources so that every user gets fair access and good performance. UNIX and Linux are examples of multi-user environments in which system resources are shared by multiple users, each with their own set of privileges and capabilities. These systems are widely used in enterprises, educational institutions, and servers, providing a solid foundation for applications that several users need to access at the same time.

C) Real-Time Operating Systems (RTOS)

Real-time operating systems are designed to process data as it arrives, within strict and predictable time limits. They are vital in applications that require precise timing, including embedded systems, medical devices, industrial control systems, and robotics. An RTOS is distinguished by its predictability and reliability, which ensure that tasks are completed within their time constraints. Unlike general-purpose operating systems, real-time operating systems prioritize deterministic task scheduling and interrupt handling to meet the strict timing requirements of real-time applications.

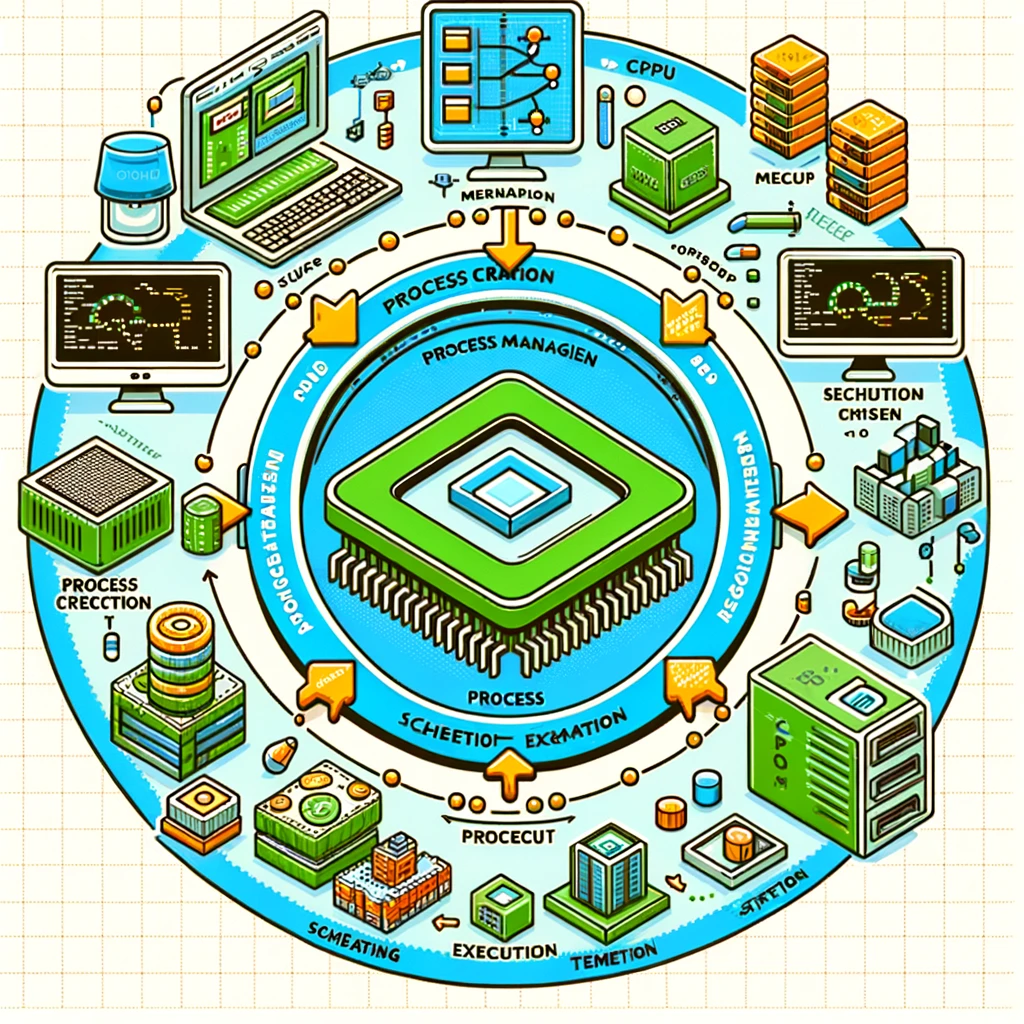

3. Process Management in Operating Systems

Process management is at the heart of operating system functionality: it is the component that allows the computer’s CPU to execute many tasks efficiently. Understanding it shows how an operating system handles program execution, multitasking, and the allocation of resources among active processes.

Process management involves several key operations, including process creation, scheduling, execution, and termination. The operating system uses scheduling algorithms to decide which process runs at any given time and manages the transitions of processes between the stages of their lifecycle. This matters in both single-user and multi-user operating systems, where the goal is to keep CPU utilization high while ensuring that every user or task receives attention from the system within a reasonable time.
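To make the scheduling idea concrete, here is a minimal Python sketch of Round Robin, one of the algorithms listed in the course outline. It assumes every process arrives at time 0 and ignores context-switch overhead; the function name `round_robin`, the process names, and the burst times are hypothetical and chosen only for illustration.

```python
from collections import deque

def round_robin(bursts, quantum):
    """Simulate Round Robin for processes that all arrive at time 0.

    bursts: dict mapping process name -> CPU burst time.
    Returns a dict mapping process name -> completion time.
    """
    remaining = dict(bursts)
    ready = deque(bursts)              # ready queue, initially in insertion order
    clock = 0
    completion = {}
    while ready:
        pid = ready.popleft()
        run = min(quantum, remaining[pid])   # run one quantum, or less if the burst ends
        clock += run
        remaining[pid] -= run
        if remaining[pid] == 0:
            completion[pid] = clock
        else:
            ready.append(pid)          # preempted: go to the back of the ready queue
    return completion

bursts = {"P1": 5, "P2": 3, "P3": 1}
done = round_robin(bursts, quantum=2)
# With arrival at time 0, waiting time = completion time - burst time.
waiting = {p: done[p] - bursts[p] for p in bursts}
print(done)
print(waiting)
```

With a quantum of 2, P3 finishes at time 5, P2 at 8, and P1 at 9, so the waiting times are 4, 5, and 4. A smaller quantum makes the system more responsive but causes more context switches.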

A major responsibility of process management is ensuring that each process has access to the CPU, memory, and I/O devices it needs to run without interfering with other processes. This requires careful coordination, particularly when many applications are running concurrently. The operating system must allocate resources fairly and efficiently, avoid deadlocks in which programs wait indefinitely for resources, and manage process priorities so that critical tasks receive prompt attention.
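Priority levels translate directly into a scheduling policy. The sketch below shows non-preemptive priority scheduling under simplifying assumptions: all processes arrive at time 0, a smaller number means a higher priority (conventions differ between systems), and the process list and the function name `priority_schedule` are hypothetical.

```python
def priority_schedule(procs):
    """Non-preemptive priority scheduling; all processes arrive at time 0.

    procs: list of (name, burst, priority); a smaller number means higher priority.
    Returns the run order as (name, start, finish) tuples.
    """
    order = sorted(procs, key=lambda p: p[2])   # stable sort keeps ties in input order
    clock = 0
    timeline = []
    for name, burst, _priority in order:
        timeline.append((name, clock, clock + burst))   # runs to completion once started
        clock += burst
    return timeline

procs = [("P1", 10, 3), ("P2", 1, 1), ("P3", 2, 4), ("P4", 1, 5), ("P5", 5, 2)]
for name, start, finish in priority_schedule(procs):
    print(f"{name}: runs {start}-{finish}")
```

Here P2 runs first (0 to 1), followed by P5, P1, P3, and finally P4 (18 to 19). Because the lowest-priority process always goes last, a steady stream of higher-priority work could keep it waiting indefinitely, which is why real schedulers need some way to prevent starvation.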

In real-time operating systems, process management is even more demanding. To meet strict timing constraints, these systems need scheduling that is not only efficient but also predictable. Real-time process management is designed to ensure that time-sensitive operations finish by their deadlines, which is vital for applications that require high reliability and predictability, such as embedded systems in medical devices or automotive control systems.

Understanding process management is essential for understanding how operating systems keep a computer running smoothly and efficiently regardless of workload. This knowledge matters not only for computer science and information technology students, but also for professionals building software that must use system resources efficiently or work within the limits of a real-time operating system. This course examines process management in detail and explains the algorithms and methods operating systems use to manage many processes, making it a solid starting point for anyone who wants to grasp the fundamentals of how operating systems work.

4. Process Synchronization

As we go deeper into the complexities of operating systems, process synchronization emerges as a foundation for efficient and error-free process management. It ensures that multiple processes can run concurrently without interfering with one another, preserving data consistency and system integrity. Process synchronization is especially important in multi-user and multitasking environments, where processes frequently need to share resources or communicate with one another to complete their tasks.

Process synchronization uses techniques that control the order in which processes access shared data, preventing race conditions: situations in which the outcome depends on the unpredictable order in which processes execute, leading to inconsistent results. Operating systems handle this with synchronization mechanisms such as semaphores, mutexes (mutual exclusion locks), and monitors. These tools provide a formal way to control access to shared resources, guaranteeing that only one process accesses a resource at a time or that specific conditions are met before a process is allowed to proceed.
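A classic race condition is several threads incrementing a shared counter: `counter += 1` is a read-modify-write, so updates can be lost if two threads interleave. This Python sketch uses threads rather than separate processes for brevity and protects the update with a `threading.Lock`, so the critical section runs in one thread at a time. The names `increment` and `run` are illustrative.

```python
import threading

counter = 0
lock = threading.Lock()

def increment(times):
    global counter
    for _ in range(times):
        with lock:          # critical section: one thread at a time updates counter
            counter += 1    # read-modify-write; updates can be lost without the lock

def run(num_threads=4, times=10_000):
    """Start num_threads threads that each increment the counter `times` times."""
    global counter
    counter = 0
    threads = [threading.Thread(target=increment, args=(times,)) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()            # wait for every thread to finish
    return counter

print(run())
```

With the lock, `run()` always returns 40000. Removing the `with lock:` line can make the total come out lower, although how often that happens depends on the interpreter and on timing.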

A semaphore is a powerful synchronization mechanism that controls access to shared resources using a simple integer value. Semaphores come in two types: binary semaphores, which take only the values 0 and 1 to signal whether a resource is available and behave much like a mutex; and counting semaphores, which extend the idea to allow up to a predefined number of concurrent accesses. Mutexes, on the other hand, are locks that guarantee mutual exclusion by allowing only one thread or process at a time to hold the lock and access the protected resource. This prevents inconsistent data and keeps interactions between running processes safe.
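To show what a semaphore’s integer value actually does, the sketch below builds a textbook counting semaphore with `wait` and `signal` operations on top of `threading.Condition`. It is illustrative only, since Python’s standard library already provides `threading.Semaphore`; the class name `CountingSemaphore` and the worker setup are hypothetical. Initializing it with 1 gives binary-semaphore behavior.

```python
import threading
import time

class CountingSemaphore:
    """Textbook counting semaphore built on a condition variable (illustrative sketch)."""

    def __init__(self, value):
        self._value = value                  # number of available permits
        self._cond = threading.Condition()

    def wait(self):                          # also called P() or down()
        with self._cond:
            while self._value == 0:          # block until a permit is free
                self._cond.wait()
            self._value -= 1

    def signal(self):                        # also called V() or up()
        with self._cond:
            self._value += 1
            self._cond.notify()              # wake one waiting thread, if any

sem = CountingSemaphore(2)                   # at most 2 threads inside at once
active = 0
max_active = 0
stats_lock = threading.Lock()

def worker():
    global active, max_active
    sem.wait()
    try:
        with stats_lock:
            active += 1
            max_active = max(max_active, active)
        time.sleep(0.01)                     # simulate using the shared resource
        with stats_lock:
            active -= 1
    finally:
        sem.signal()

threads = [threading.Thread(target=worker) for _ in range(6)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(max_active)
```

Six workers compete for the resource, but `max_active` never exceeds 2, the semaphore’s initial value.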

A closely related concern is deadlock, in which a group of processes is stalled, each waiting for resources held by another, forming a circular chain of dependencies that never resolves. Operating systems try to avoid deadlocks through careful resource allocation strategies and deadlock detection algorithms, keeping the system responsive and efficient.

Understanding process synchronization is critical for comprehending how operating systems handle the difficulties of concurrent execution, ensuring data integrity, and preventing system failure. This understanding is essential not only for computer science and information technology students, but also for professionals creating applications that require concurrent access to shared resources. By investigating the methods and tactics utilized by operating systems to accomplish process synchronization, students can obtain a better grasp of the ideas that underpin efficient and reliable system design.

5. Deadlock Management

Deadlock management is an important topic in the study of operating systems because it covers how deadlocks are prevented, avoided, and resolved. A deadlock arises when a set of processes are all waiting on resources held by one another, resulting in a standstill in which none of them can move forward. Handling deadlocks is necessary for system stability and efficiency, particularly in complex computing environments where many processes share resources.

Operating systems tackle deadlocks with several strategies, each attacking the problem from a different angle: prevention, avoidance, and detection and recovery. Deadlock prevention means designing the system so that at least one of the necessary conditions for deadlock (mutual exclusion, hold and wait, no preemption, and circular wait) can never hold. For example, requiring processes to request all the resources they need at once eliminates the hold-and-wait condition, so no process ever holds some resources while waiting for others.

Deadlock avoidance, on the other hand, uses techniques such as the Banker’s Algorithm, which examines the system’s state before each allocation and grants a request only if the system will remain in a safe state. These algorithms require advance knowledge of the maximum resources each process may request, which makes them more demanding to apply but effective at preventing deadlocks before they happen.
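The core of the Banker’s Algorithm is a safety check: a state is safe if there is some order in which every process can obtain its remaining need, finish, and release what it holds. Below is a minimal Python sketch of that check; the function name `is_safe` and the example matrices (five processes, three resource types) are illustrative assumptions.

```python
def is_safe(available, max_need, allocation):
    """Banker's Algorithm safety check.

    available:  free instances of each resource type
    max_need:   max_need[i][j] = maximum demand of process i for resource j
    allocation: allocation[i][j] = instances of resource j held by process i
    Returns (True, safe_sequence) if the state is safe, else (False, []).
    """
    n = len(allocation)
    need = [[m - a for m, a in zip(max_need[i], allocation[i])] for i in range(n)]
    work = list(available)
    finished = [False] * n
    sequence = []
    progress = True
    while progress:
        progress = False
        for i in range(n):
            if not finished[i] and all(nd <= w for nd, w in zip(need[i], work)):
                # Process i can finish and then release everything it holds.
                work = [w + a for w, a in zip(work, allocation[i])]
                finished[i] = True
                sequence.append(i)
                progress = True
    if all(finished):
        return True, sequence
    return False, []

available = [3, 3, 2]
max_need = [[7, 5, 3], [3, 2, 2], [9, 0, 2], [2, 2, 2], [4, 3, 3]]
allocation = [[0, 1, 0], [2, 0, 0], [3, 0, 2], [2, 1, 1], [0, 0, 2]]
print(is_safe(available, max_need, allocation))
```

For this state the check finds the safe sequence P1, P3, P4, P0, P2. To decide on a new request, an allocator would tentatively grant it, rerun the check, and roll the grant back if the resulting state is unsafe.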

When deadlocks cannot be prevented or avoided, operating systems must rely on deadlock detection and recovery. Deadlock detection algorithms run periodically, looking for cycles of processes that are waiting on one another. When a deadlock is found, the system must recover, which often requires terminating or rolling back one or more processes to free their resources and break the cycle.
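When each resource has a single instance, deadlock detection reduces to finding a cycle in a wait-for graph, where an edge from P to Q means P is waiting for a resource that Q holds. The sketch below finds such a cycle with a depth-first search; the graph format and the function name `find_deadlock_cycle` are assumptions for illustration. With multiple instances per resource type, detection instead uses a safety-style check similar to the Banker’s Algorithm.

```python
def find_deadlock_cycle(wait_for):
    """Return a list of processes forming a cycle in a wait-for graph, or None.

    wait_for maps each process to the processes it is waiting on
    (single-instance resources assumed, so any cycle means deadlock).
    """
    WHITE, GRAY, BLACK = 0, 1, 2     # unvisited / on the current DFS path / fully explored
    color = {p: WHITE for p in wait_for}
    path = []

    def dfs(p):
        color[p] = GRAY
        path.append(p)
        for q in wait_for.get(p, []):
            if color.get(q, WHITE) == GRAY:      # back edge: q is on the current path
                return path[path.index(q):]
            if color.get(q, WHITE) == WHITE:
                cycle = dfs(q)
                if cycle:
                    return cycle
        path.pop()
        color[p] = BLACK
        return None

    for p in wait_for:
        if color[p] == WHITE:
            cycle = dfs(p)
            if cycle:
                return cycle
    return None

graph = {"P1": ["P2"], "P2": ["P3"], "P3": ["P1"], "P4": ["P1"]}
print(find_deadlock_cycle(graph))
```

Here P1, P2, and P3 wait on each other in a cycle. P4 is blocked behind the cycle but is not part of it, so a recovery step would target one of P1 to P3.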

Understanding deadlock management is critical for learners to understand how operating systems ensure that operations run smoothly and the system remains stable. This expertise is essential not only for computer science and information technology students, but also for system designers and application developers. By studying deadlock management principles and approaches, participants in this course can help to design more dependable and efficient operating systems and applications, as well as improve their problem-solving skills and technical competence in the field.

6. Memory Management

Memory management is a fundamental feature of operating systems that is essential for improving computer efficiency and effectiveness. It entails allocating and deallocating memory spaces to various programs and processes, ensuring that they have the resources they need to run properly while making the most use of the system’s physical memory. This complex procedure is critical for the stability of operating systems and the seamless execution of software applications.

The memory management subsystem of the operating system is in charge of several critical functions, including memory allocation, paging, segmentation, and virtual memory management. These techniques work together to manage the system’s memory, ensuring that each process has access to the memory it requires without interfering with other processes. Memory allocation strategies, such as dynamic allocation for runtime requests, assign memory blocks to processes in a way that reduces waste and maximizes speed.
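For contiguous allocation, two common placement strategies are first-fit (take the first free block large enough) and best-fit (take the smallest free block large enough). This Python sketch implements both over a list of free blocks; the `[start, size]` representation, the function name `allocate`, and the block sizes are hypothetical.

```python
def allocate(holes, request, strategy="first"):
    """Pick a free block (hole) for a request using first-fit or best-fit.

    holes: list of [start, size] free blocks, sorted by address; modified in place.
    Returns the start address of the allocation, or None if nothing fits.
    """
    candidates = [i for i, (_, size) in enumerate(holes) if size >= request]
    if not candidates:
        return None
    if strategy == "first":
        i = candidates[0]                                  # lowest-address hole that fits
    elif strategy == "best":
        i = min(candidates, key=lambda k: holes[k][1])     # smallest hole that fits
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    start, size = holes[i]
    if size == request:
        holes.pop(i)                                       # hole used up exactly
    else:
        holes[i] = [start + request, size - request]       # remainder stays free
    return start

free_blocks = [[0, 100], [200, 500], [800, 200], [1100, 300], [1500, 600]]
print(allocate([b[:] for b in free_blocks], 212, "first"))
print(allocate([b[:] for b in free_blocks], 212, "best"))
```

For a 212-unit request, first-fit picks the block at 200 and best-fit picks the tighter block at 1100, leaving an 88-unit remainder. Small leftovers like that are the external fragmentation that paging is designed to avoid.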

Paging and segmentation are techniques for using memory efficiently and, combined with virtual memory, for running processes that need more memory than is physically available. Paging divides a process’s address space into fixed-size pages that are loaded into equally sized physical frames, whereas segmentation divides a process into variable-size segments based on its logical divisions, such as code, data, and stack. Both techniques let the operating system place a process in non-contiguous regions of free memory, which improves responsiveness and multitasking.
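With paging, each virtual address is split into a page number and an offset; the page table gives the frame for that page, and the offset is kept unchanged. The sketch below models a single-level page table as a Python dictionary and assumes 4 KiB pages; the mapping itself is made up, and a missing entry stands in for a page fault.

```python
PAGE_SIZE = 4096   # assumed page size in bytes (4 KiB)

def translate(virtual_addr, page_table, page_size=PAGE_SIZE):
    """Translate a virtual address using a single-level page table.

    page_table maps page number -> frame number; a missing entry models a page fault.
    """
    page, offset = divmod(virtual_addr, page_size)   # split into page number and offset
    if page not in page_table:
        raise LookupError(f"page fault: page {page} is not resident")
    frame = page_table[page]
    return frame * page_size + offset                # same offset inside the frame

page_table = {0: 5, 1: 2, 2: 7}    # hypothetical page -> frame mapping
print(hex(translate(0x1ABC, page_table)))
```

Virtual address 0x1ABC lies in page 1 at offset 0xABC; page 1 maps to frame 2, so the physical address is 0x2ABC.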

Another important concept in memory management is virtual memory, which allows the operating system to extend physical memory by using disk space. This creates the illusion of a much larger memory, allowing many programs to run at the same time and making it possible to run applications larger than physical RAM. Virtual memory management uses paging and segmentation to map virtual addresses to physical addresses, keeping actively used pages in RAM and the rest on disk.
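When a program touches a page that is not in RAM, a page fault occurs, and if every frame is occupied the operating system must choose a page to evict. The course outline lists page replacement algorithms and Belady’s anomaly; the sketch below counts faults for two common policies, FIFO and LRU, on a reference string. The function names are illustrative.

```python
from collections import OrderedDict, deque

def count_faults_fifo(refs, frames):
    """Count page faults with FIFO replacement (evict the oldest loaded page)."""
    resident, queue, faults = set(), deque(), 0
    for page in refs:
        if page in resident:
            continue                          # hit: FIFO order is not updated
        faults += 1
        if len(resident) == frames:
            resident.remove(queue.popleft())  # evict the page loaded earliest
        resident.add(page)
        queue.append(page)
    return faults

def count_faults_lru(refs, frames):
    """Count page faults with LRU replacement (evict the least recently used page)."""
    recent, faults = OrderedDict(), 0         # ordered from least to most recently used
    for page in refs:
        if page in recent:
            recent.move_to_end(page)          # hit: mark as most recently used
            continue
        faults += 1
        if len(recent) == frames:
            recent.popitem(last=False)        # evict the least recently used page
        recent[page] = True
    return faults

refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
for n in (3, 4):
    print(n, count_faults_fifo(refs, n), count_faults_lru(refs, n))
```

On this reference string, FIFO incurs 9 faults with 3 frames but 10 with 4 frames, an instance of Belady’s anomaly. LRU drops from 10 faults to 8 as frames are added.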

Understanding memory management is critical for anyone seeking to expand their knowledge of operating systems. This knowledge not only explains how operating systems enhance hardware efficiency, but it also offers insights into the construction of performance-optimized apps. This course will introduce students to the ideas and techniques of memory management, allowing them to assess and enhance the memory consumption of operating systems and applications, ultimately improving the machine’s performance and dependability.

7. Disk Scheduling and Management

Disk scheduling and management are key components of operating systems, with the goal of optimizing disk drive activity to improve overall system performance and efficiency. This section discusses the methods and algorithms operating systems use to order read and write operations on disk so that data is accessed as efficiently as possible. Because hard drives are mechanical and moving the read/write heads takes time, effective disk scheduling is critical for reducing response times and increasing throughput.

Operating systems use a variety of disk scheduling algorithms to determine the order in which disk I/O requests are served. These algorithms include First-Come, First-Served (FCFS), Shortest Seek Time First (SSTF), and SCAN (the elevator algorithm), among others. Each has its advantages and suits different conditions. FCFS, for example, is simple and fair, but it does not always deliver the fastest service. SSTF reduces average seek time by serving the request closest to the current head position first, but it can starve requests far from the head. SCAN limits seek times by moving the disk arm in one direction, serving requests along the way until it reaches the end of the disk, then reversing and serving the remaining requests in the opposite direction, much like an elevator moving between floors.
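To compare the algorithms, we can measure total head movement in cylinders for a request queue. This Python sketch implements FCFS, SSTF, and SCAN; it assumes the head starts at cylinder 53, that SCAN initially moves toward cylinder 0, and that the request queue is a made-up example. The function names are illustrative.

```python
def fcfs(head, requests):
    """Serve requests in arrival order."""
    return list(requests)

def sstf(head, requests):
    """Always serve the pending request closest to the current head position."""
    pending, order = list(requests), []
    while pending:
        nearest = min(pending, key=lambda r: abs(r - head))
        pending.remove(nearest)
        order.append(nearest)
        head = nearest
    return order

def scan_down(head, requests):
    """SCAN (elevator) moving toward cylinder 0 first, then reversing upward."""
    lower = sorted((r for r in requests if r <= head), reverse=True)
    upper = sorted(r for r in requests if r > head)
    return lower + [0] + upper   # 0 is the disk edge the arm reaches before reversing

def total_movement(head, order):
    """Sum of absolute head movements (in cylinders) for a service order."""
    total = 0
    for cylinder in order:
        total += abs(cylinder - head)
        head = cylinder
    return total

queue = [98, 183, 37, 122, 14, 124, 65, 67]
start = 53
for name, policy in [("FCFS", fcfs), ("SSTF", sstf), ("SCAN", scan_down)]:
    print(name, total_movement(start, policy(start, queue)))
```

For this queue, FCFS moves the head 640 cylinders, while SSTF and SCAN each need 236. SSTF’s service order here is 65, 67, 37, 14, 98, 122, 124, 183.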

Disk management also includes the organization and structure of files on a drive, such as partitioning and file systems. Partitioning divides the disk into parts that can be controlled independently, allowing multiple file systems to coexist on the same drive or separating operating system files from user files to improve security and performance. The file system, in turn, controls how files and directories are stored, allowing users and applications to store, organize, and retrieve data. File systems can differ widely in complexity, features, and efficiency, with examples being FAT (File Allocation Table), NTFS (New Technology File System), and ext3/ext4 (third/fourth extended filesystem), among others.

Understanding disk scheduling and management is significant for both students and professionals because it provides insight into how operating systems manage one of the most important resources: data storage. Efficient disk management improves not only the performance of individual applications, but also the operating system’s overall stability and efficiency. This course will teach students the ideas behind disk scheduling algorithms and disk management strategies, allowing them to evaluate and optimize storage solutions in a variety of computing scenarios.

8. File System Organization and Management

Operating systems rely heavily on file system organization and administration to ensure that data is efficiently stored, managed, and accessible on storage devices. This feature of operating systems is critical for the logical layout of files and directories, resulting in an organized environment that supports both user and system tasks. A file system’s organization consists of defining how data is stored in files, how these files are labeled, managed, and accessed by the system and users, and how data integrity is maintained.

A well-designed file system allows for efficient data retrieval and storage, supports security measures, and protects data integrity through error detection and correction mechanisms. Operating systems use a variety of file systems, each with its own characteristics and tailored to particular storage needs. For example, the File Allocation Table (FAT) file system, which is widely used on external storage devices, is simple and broadly compatible. In comparison, the New Technology File System (NTFS) supports larger volumes, file permissions for security, and error recovery via journaling. The ext3 and ext4 file systems, widely used on Linux, provide journaling, high performance, and support for very large file systems.
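In FAT, each table entry stores the number of the next cluster in a file, so reading a file means following a chain from its first cluster. This sketch follows such a chain in a Python list; the end-of-chain marker `EOC = -1` is a simplification (real FAT variants reserve specific values), and the function name and table contents are hypothetical.

```python
EOC = -1   # hypothetical end-of-chain marker; real FAT variants reserve specific values

def file_clusters(fat, first_cluster):
    """Follow a FAT chain from a file's first cluster and return its clusters in order.

    fat: list where fat[c] is the next cluster after c, or EOC for the last one.
    """
    chain, cluster, seen = [], first_cluster, set()
    while cluster != EOC:
        if cluster in seen:                  # guard against a corrupted, looping table
            raise ValueError("corrupt FAT: cycle in cluster chain")
        seen.add(cluster)
        chain.append(cluster)
        cluster = fat[cluster]               # look up the next cluster in the table
    return chain

# Hypothetical 10-entry table (0 marks a free cluster here):
# one file starts at cluster 2 and occupies 2 -> 5 -> 3 -> 8.
fat = [0, 0, 5, 8, 0, 3, 0, 0, EOC, 0]
print(file_clusters(fat, 2))   # [2, 5, 3, 8]
```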

File system management covers tasks such as creating, deleting, reading, and writing files, as well as controlling the permissions and attributes that govern how files are accessed and used. It also includes metadata management, which records file properties such as creation and modification dates and size. Efficient file system management ensures that the system can handle enormous amounts of data while still providing fast access and reliability.

Operating systems must handle not only files and directories, but also the physical storage space in which they are kept. This includes techniques such as partitioning, which splits a disk into manageable portions, and formatting, which prepares the storage medium for usage by creating a basic file system structure.

Anyone interested in operating systems must first understand how file systems are organized and managed. This knowledge not only explains how data is stored and accessed, but it also emphasizes the importance of the file system in ensuring data security, reliability, and efficiency. This course will provide students with an understanding of various file systems, as well as how to efficiently pick and manage them based on the requirements of certain applications or environments. This understanding is critical for optimizing system performance while also assuring data integrity and security in computer systems.

9. Conclusion

As we end the course, we have covered the key concepts that make up the architecture and functionality of operating systems. This course has provided a comprehensive journey into the world of operating systems, beginning with an overview and progressing through the complexities of process management, synchronization, and deadlock management, as well as the intricate mechanisms of memory management, disk scheduling, and file system organization and management.

Operating systems are the foundation of computers, managing the interaction of hardware and software to provide the seamless functioning we rely on every day. This course has provided students with insights into how operating systems manage resources, carry out tasks, and store data to ensure efficiency, dependability, and security. The knowledge gained here lays the groundwork for further study of specific topics such as operating system design, systems programming, and the creation of programs optimized for various computing environments.

Furthermore, this course emphasized the importance of operating systems in the larger context of information technology and computer science. Understanding the ideas and mechanics of operating systems is vital not only for students and professionals in these professions but also for anybody wishing to gain a better understanding of digital systems overall. As technology evolves, the principles covered in this course give a solid foundation for adapting to new computing paradigms and advances.